Your Product. Our AI. Designed for the Edge

Seamlessly integrate industry-leading AI features like stem separation into your product, and transform the way your customers experience sound.

Edge Audio: Elevating Experiences & Transforming Industries

Embedded AI empowers industry leaders to add powerful new features into their products, creating transformative customer experiences.

In-vehicle Audio,

Smart Home,

Edge Devices

Smart Speakers,

Wearables,

Phones, Computers

Speakers, Mixers,

Interfaces

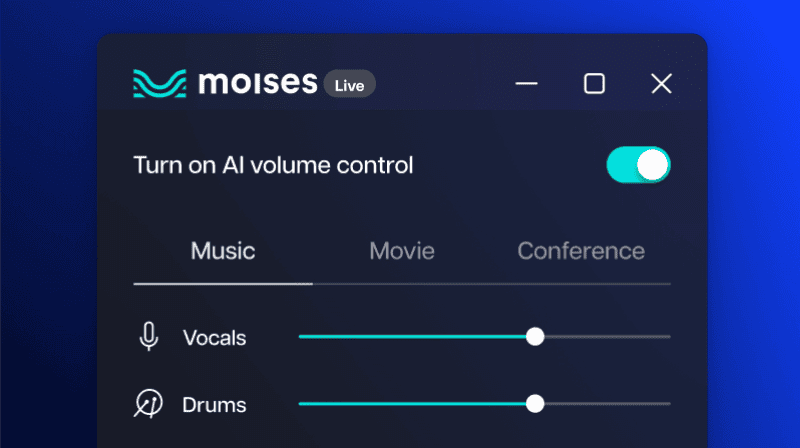

Real-time audio separation and control

Separate and edit audio stems in real-time. See a demonstration.

Accurate, low latency processing

With broad support for a wide range of chipsets, Music AI helps you put AI technology wherever your customers need it most.

High-performance, localized AI

AI-powered audio features that run with or without an internet connection, to offer your customers enhanced privacy, security, and reliability.

Edge Audio Solutions for Your Next Product

On-Device AI

Integrate Music AI technology into your desktop or mobile application to run our leading AI-powered audio processing in real-time.

Embedded AI

Differentiate your products by embedding our models directly into your hardware. With lightweight model options and support for embedded Linux and RTOs (in development), we’ll help you put AI wherever your customers need it most.

The Music AI Embedded SDK: Designed for Real-World Deployment

The Music AI Embedded SDK is a collection of artificial intelligence models designed to run stem separation and other AI-powered audio services locally on everything from the latest PCs to small embedded systems.

Supporting Major Chipsets

Why Music AI?

Customizable Configuration

Designed with the specific challenges of edge AI in mind, our embedded SDK includes models with a flexible range of specifications, allowing development teams to customize to meet their specific needs.

Broad chipset capability

Optimized latency

Lightweight model options

Expert implementation assistance

Licensing and Annotation

Our proprietary approach to data preparation and model training ensures unmatched performance and quality.

Largest catalogue of licensed materials

Ethically trained models

Faster, more accurate validation

Scalable annotation for increased accuracy across multiple models

Hybrid AI Solutions

Music AI is a leading provider of both cloud and edge audio AI. If needed, we can help you build a hybrid solution that integrates the benefits of both environments.

Leading API with more than 50 audio AI modules

SDK to run key task locally in real-time

Integration flexibility for both environments

Scalable annotation for increased accuracy across multiple models

Frequently Asked Questions

Stem separation is a term in audio production for when a track is divided into its individual parts. For example, a professional audio engineer might choose to separate the bass, vocals, and drums, so that they can make adjustments to each part separately. These capabilities are useful in other industries, as well. For example, cinematic stem separation can also allow filmmakers to lower the volume of background noise in a scene, so that the audience can better hear the characters' voices.

Embedded stem separation is when stem separation is built directly into the hardware of a device. For example, a product team might want to embed our stem separation models into an audio surround sound system, so that their customizers could personalize their audio experience in real time while watching a movie.

While you could reasonably call embedded AI a type of on-device AI, we’ve found that the requirements of embedded systems are unique enough that it’s helpful to view these as distinct categories. We use on-device to refer to AI models running on computers, tablets, and other consumer devices. We use embedded AI to refer to AI models running on lower-powered devices, much closer to the hardware.

Our API is an excellent tool for accessing our many AI models conveniently at scale. This is a cloud-based service where the models run remotely. There are pros and cons of both edge AI and internet-based services like our API. For example, models from the API are always up to date without any need to update on your end, and they should be able to run with the highest possible performance, even on hardware where that would otherwise be impossible. Running the models embedded or on-device, however, allows for much lower latency and gives customers the ability to use these features while offline. It’s possible your product might benefit from either or both of these tools, depending on your specific needs.

SDR is a scientific measurement that is widely accepted across the audio research community for assessing stem separation results. It measures the power ratio between the intended source and the distortion artifacts introduced during separation. This matters because the higher the SDR, the better the audio will sound once the stems are separated. Music AI is proud to have industry-leading SDR.